Top 10 AI Models in August 2024

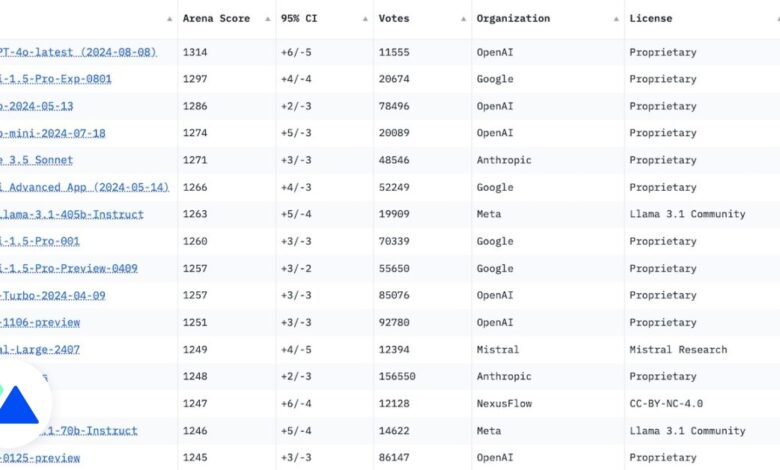

In terms of performance, OpenAI's language models now face direct competition from those Google develops for its Gemini chatbot. At least, that is what the latest update of the Chatbot Arena suggests. This ranking was designed by researchers and students at the prestigious University of California, Berkeley, in the United States, with the support of Hugging Face.

Updated in real time and assigning a performance score to each AI model, the Chatbot Arena aims to rank text-generating AIs objectively, based on user contributions. Users are invited to compare the responses that two AI models, whose identities are hidden from them, give to the same query.

Top 10 Best Performing Language Models in August 2024

In July 2024, the company that created ChatGPT, aided by the deployment of GPT-4o mini, took five of the top ten positions in this ranking. In the process, it pushed most of its competitors out of the top 10, with the exception of Anthropic and Google. The Mountain View firm, which had been slightly behind until now, responded this month by launching Gemini 1.5 Flash: a model that is supposed to be more effective in its responses and which has been integrated into the free version of its eponymous chatbot.

Thanks to its cutting-edge technology, Google has made its way onto the podium of the Chatbot Arena, while also holding several other places in the top 10 (6th, 8th and 9th). OpenAI, however, keeps its lead, placing two models on the podium and four in the top 10. In the rest of the ranking, Meta rises to seventh position with Meta Llama 3.1, while Anthropic loses two positions with Claude 3.5 Sonnet, its most advanced model.

- ChatGPT 4o Latest: 1314 (Elo score)

- Gemini 1.5 Pro 0801: 1297

- GPT-4o 0513: 1286

- GPT-4o mini 0718: 1274

- Claude 3.5 Sonnet: 1271

- Gemini Advanced: 1266

- Meta Llama 3.1: 1263

- Gemini 1.5 Pro: 1260

- Gemini 1.5 Pro Preview: 1257

- GPT-4 Turbo 0409: 1257

Chatbot Arena Ranking Criteria

Designed by the Large Model Systems Organization (LMSYS), which brings together American students and researchers, the Chatbot Arena uses the Elo system to rank generative models. But what is it, exactly? This rating system, used in several competitive disciplines such as esports and chess, is well suited to the duel principle on which the Chatbot Arena is based. According to LMSYS, it also makes it possible “to predict the outcome of the (next) match”.

An Elo score can be thought of as a provisional rating that changes with performance. If, during a duel, a generative model with a high score, such as Gemini 1.5 Pro this month, loses to a lower-rated opponent, such as Claude 3.5 Sonnet, it will lose points. Conversely, a model gains points when it beats a supposedly stronger one.
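To make the mechanism concrete, here is a minimal sketch of the textbook Elo update applied to a single duel. The function names and the K-factor of 32 are illustrative assumptions, and the Chatbot Arena's own computation may differ in its details. The two ratings are taken from the August 2024 list above.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return the new ratings of A and B after one duel.

    score_a is 1.0 if A wins, 0.0 if A loses, 0.5 for a tie.
    k (assumed value, not from the article) controls how far ratings move.
    """
    exp_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - exp_a)
    # Whatever A gains, B loses: the update is zero-sum.
    return rating_a + delta, rating_b - delta


gemini, claude = 1297.0, 1271.0  # Gemini 1.5 Pro 0801, Claude 3.5 Sonnet

# Case 1: the higher-rated model wins, as expected.
favorite_wins = elo_update(gemini, claude, score_a=1.0)
# Case 2: the higher-rated model loses (an upset).
favorite_loses = elo_update(gemini, claude, score_a=0.0)

print(f"expected win rate for the favorite: {expected_score(gemini, claude):.2f}")
print(f"after a win:  {favorite_wins[0]:.1f} vs {favorite_wins[1]:.1f}")
print(f"after a loss: {favorite_loses[0]:.1f} vs {favorite_loses[1]:.1f}")
```

With a 26-point gap, the favorite is expected to win only slightly more than half the time, so a loss costs it somewhat more points than a win would earn it. The wider the gap between two models, the more pronounced this asymmetry becomes.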

The Elo system is also used by Artificial Analysis, an organization that offers a similar ranking for image-generating AIs, such as Midjourney or DALL-E.